Caching is a vital technique in software development that enhances performance by temporarily storing copies of data that is frequently requested. By reducing the need to repeatedly fetch or compute the same data, caching improves response times, reduces server load, and enhances the user experience. Effective caching strategies help optimize resources and ensure scalability in high-traffic applications.

In this article, we’ll explore different caching strategies, how they work, and the best practices for implementing them in software development projects.

1. What is Caching?

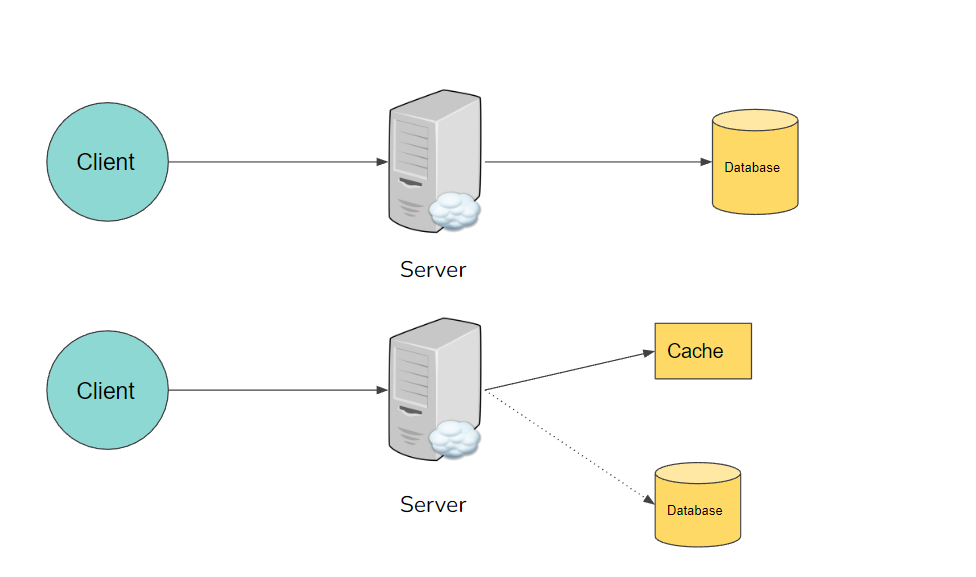

Caching refers to the process of storing copies of frequently accessed data in a temporary storage layer (the cache) so that future requests for that data can be served faster. Caches are usually placed close to the data consumers, for example in application memory or on edge servers, to minimize the time required to retrieve the data.

Common use cases of caching include:

- Database query results: To avoid running the same expensive queries multiple times.

- Web pages or assets: To reduce load times for frequently accessed web pages or images.

- API responses: To decrease the number of API calls by storing the responses temporarily.
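
As a minimal sketch of the idea, Python's standard `functools.lru_cache` decorator can keep the results of an expensive call in memory. The function `expensive_lookup` and the `call_count` counter are hypothetical stand-ins for a slow query or API call, not part of any real system.

```python
from functools import lru_cache

call_count = 0  # counts how often the "expensive" work actually runs


@lru_cache(maxsize=128)
def expensive_lookup(user_id: int) -> str:
    """Hypothetical stand-in for a slow database query or API call."""
    global call_count
    call_count += 1
    return f"profile-{user_id}"


expensive_lookup(42)  # first call: computed, then stored in the cache
expensive_lookup(42)  # second call: served from the cache
```

After the two calls, the underlying function has run only once; the second result came from the cache.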

2. Types of Caching

There are several types of caching, each suited to different purposes within a software system:

1. Client-Side Caching

In client-side caching, data is stored locally on the user’s device, reducing the need for network requests. Examples include:

- Browser caching: Storing website assets like HTML, CSS, JavaScript, and images in the browser cache.

- LocalStorage/SessionStorage: Storing small amounts of data in the user’s browser. localStorage persists across browser sessions, while sessionStorage is cleared when the tab or window is closed.

2. Server-Side Caching

Server-side caching stores frequently accessed data on the server to improve performance. Examples include:

- Database caching: Storing query results to avoid running the same database queries repeatedly.

- In-memory caching: Using memory-based stores like Redis or Memcached to store frequently accessed data for faster retrieval.

3. Reverse Proxy Caching

A reverse proxy cache sits between the client and the server, caching responses from the server and serving them to the client. This approach is used by content delivery networks (CDNs) and by some load balancers. Examples include:

- Varnish Cache: A reverse proxy caching server used to accelerate HTTP responses.

- CDNs: Content delivery networks like Cloudflare or AWS CloudFront cache static assets and deliver them closer to the client.

3. Popular Caching Strategies

1. Cache-Aside (Lazy Loading)

In the cache-aside strategy, data is loaded into the cache only when a request for it is made. If the data isn’t found in the cache (a cache miss), it is fetched from the database or another source, and then added to the cache for future use.

- Advantages: Simple and easy to implement; ideal when cache storage is limited.

- Disadvantages: Data is not pre-loaded, so the first request for each key incurs a cache miss and higher latency. Cached entries can also become stale unless they are invalidated or expired.

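Example:

    // Cache-aside read: try the cache first, fall back to the database on a miss
    Object getData(String dataKey) {
        if (cache.contains(dataKey)) {
            return cache.get(dataKey);          // cache hit: no database access
        }
        Object data = database.query(dataKey);  // cache miss: load from the source
        cache.put(dataKey, data);               // populate the cache for later requests
        return data;
    }

2. Write-Through Caching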

In write-through caching, every write updates both the database and the cache synchronously, before the write is acknowledged. This keeps the cache in step with the most recently written data.

- Advantages: Data written through the cache stays consistent between the cache and the database.

- Disadvantages: Adds write latency, because each write must complete in both the database and the cache before it returns.
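
The write path described above can be sketched as follows. This is a minimal illustration, assuming a plain dictionary stands in for the database; the class name `WriteThroughCache` and its methods are hypothetical.

```python
class WriteThroughCache:
    """Sketch of write-through caching; `database` is a dict standing in for a real store."""

    def __init__(self, database: dict):
        self.database = database
        self.cache = {}

    def write(self, key, value):
        # Both stores are updated in the same synchronous call.
        # The database is written first so a failed write never reaches the cache.
        self.database[key] = value
        self.cache[key] = value

    def read(self, key):
        if key in self.cache:
            return self.cache[key]
        # Entries that were never written through are loaded on demand.
        value = self.database[key]
        self.cache[key] = value
        return value
```

Because `write` touches both stores before returning, a read that follows a write sees the new value without going to the database.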

3. Write-Behind (Write-Back) Caching

In write-behind caching, write operations are first performed on the cache, and then asynchronously propagated to the database. This reduces the latency of write operations since the database update is deferred.

- Advantages: Faster write operations.

- Disadvantages: Risk of data loss if the cache fails before the database update is complete.
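
A rough sketch of this deferred write path is shown below. It assumes a dictionary as the database and an explicit `flush` call in place of the background worker a real system would use; all names are hypothetical.

```python
class WriteBehindCache:
    """Sketch of write-behind caching; `database` is a dict standing in for a real store."""

    def __init__(self, database: dict):
        self.database = database
        self.cache = {}
        self.pending = {}  # entries written to the cache but not yet persisted

    def write(self, key, value):
        # Only the cache is updated on the write path, so the call returns quickly.
        self.cache[key] = value
        self.pending[key] = value

    def read(self, key):
        if key in self.cache:
            return self.cache[key]
        return self.database.get(key)

    def flush(self) -> int:
        # Persist all pending writes; returns how many entries were written.
        flushed = len(self.pending)
        self.database.update(self.pending)
        self.pending.clear()
        return flushed
```

Anything still in `pending` when the process dies is lost, which is the data-loss risk noted above.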

4. Time-to-Live (TTL)

TTL caching assigns an expiration time to each cache entry, after which the cached data is invalidated and needs to be refreshed from the source.

- Advantages: Limits how long stale data can be served, because every entry eventually expires and is reloaded from the source.

- Disadvantages: Requires careful tuning of expiration times to balance freshness with performance.
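
Expiration can be sketched by storing an expiry timestamp next to each value. This is an illustrative example only: the `TTLCache` class is hypothetical, and the injectable `clock` parameter is an assumption added so the behaviour can be checked without waiting.

```python
import time


class TTLCache:
    """Sketch of a cache whose entries expire `ttl_seconds` after being stored."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable time source (assumption, eases testing)
        self.entries = {}   # key -> (value, expires_at)

    def put(self, key, value):
        self.entries[key] = (value, self.clock() + self.ttl)

    def get(self, key, default=None):
        item = self.entries.get(key)
        if item is None:
            return default
        value, expires_at = item
        if self.clock() >= expires_at:
            # Expired: drop the entry so the caller reloads it from the source.
            del self.entries[key]
            return default
        return value
```

A short TTL keeps data fresher at the cost of more misses; a long TTL does the opposite, which is the tuning trade-off mentioned above.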

5. Distributed Caching

In a distributed caching strategy, the cache is spread across multiple servers, which allows for greater scalability and fault tolerance. It is commonly used in large-scale applications where a single cache server may not suffice.

- Advantages: Scales horizontally and avoids a single point of failure.

- Disadvantages: More complex to implement and manage.
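
One way to picture how keys are spread across servers is simple hash-based sharding. The sketch below is an assumption-laden illustration: each "node" is just an in-process dictionary, and the `ShardedCache` class is hypothetical rather than the API of any real distributed cache.

```python
import hashlib


class ShardedCache:
    """Sketch of key sharding; each node is a dict standing in for a cache server."""

    def __init__(self, node_names):
        self.nodes = {name: {} for name in node_names}
        self.names = sorted(self.nodes)  # stable order for index lookup

    def _node_for(self, key: str) -> str:
        # A stable hash ensures the same key always maps to the same node.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.names)
        return self.names[index]

    def put(self, key: str, value):
        self.nodes[self._node_for(key)][key] = value

    def get(self, key: str, default=None):
        return self.nodes[self._node_for(key)].get(key, default)
```

Modulo-based placement remaps most keys whenever the number of nodes changes, so production systems often use consistent hashing instead.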

4. Best Practices for Caching

1. Identify What to Cache

Not all data should be cached. Prioritize caching:

- Data that is expensive to retrieve or compute.

- Data that is frequently accessed.

- Static content or rarely changing data.

2. Avoid Stale Data

To avoid serving outdated or incorrect data from the cache:

- Use TTL to ensure that cached data expires and is refreshed regularly.

- Implement cache invalidation when data is updated in the database.

3. Monitor Cache Usage

Track cache hits, misses, and evictions to understand how effectively your caching strategy is performing. Use tools like Prometheus, Grafana, or built-in cache metrics from services like Redis or Memcached.
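
Before wiring up a monitoring stack, the core metrics can be sketched with two counters. The `InstrumentedCache` class and its `loader` callback are hypothetical; a real deployment would export these numbers to a metrics system rather than keep them on the object.

```python
class InstrumentedCache:
    """Sketch of a cache that counts hits and misses."""

    def __init__(self):
        self.store = {}
        self.hits = 0
        self.misses = 0

    def get(self, key, loader):
        if key in self.store:
            self.hits += 1
            return self.store[key]
        # Miss: compute the value with the supplied loader and remember it.
        self.misses += 1
        value = loader(key)
        self.store[key] = value
        return value

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

A persistently low hit ratio suggests the cache is holding the wrong data or expiring it too aggressively.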

4. Cache Invalidation

Develop a robust strategy for invalidating outdated cache entries, ensuring that data consistency is maintained across the cache and the underlying data source.
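
One common approach, sketched here under simplifying assumptions, is to delete the cached entry whenever the underlying record changes, so the next read reloads it. The dictionaries and the `read` and `update` functions are hypothetical stand-ins for a real database and cache client.

```python
database = {"user:1": "Ada"}  # stand-in for the system of record
cache = {}                    # stand-in for the cache


def read(key):
    # Load from the database on a miss and remember the result.
    if key not in cache:
        cache[key] = database[key]
    return cache[key]


def update(key, value):
    # Write to the source of truth, then invalidate the cached copy.
    database[key] = value
    cache.pop(key, None)
```

Deleting rather than overwriting the cached entry keeps the update path simple: the next `read` repopulates the cache from the database.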

5. Consider Cache Size Limits

Caches are limited by memory, so make sure to limit the size of the data stored in the cache. Implement Least Recently Used (LRU) or Least Frequently Used (LFU) eviction policies to remove old or infrequently accessed data when the cache is full.
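
A size-bounded cache with LRU eviction can be sketched with the standard library's `collections.OrderedDict`. The `LRUCache` class below is a hypothetical illustration, not the eviction code of Redis or Memcached.

```python
from collections import OrderedDict


class LRUCache:
    """Sketch of a cache that evicts the least recently used entry when full."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.entries = OrderedDict()  # oldest entry first, newest last

    def get(self, key, default=None):
        if key not in self.entries:
            return default
        self.entries.move_to_end(key)  # mark as most recently used
        return self.entries[key]

    def put(self, key, value):
        self.entries[key] = value
        self.entries.move_to_end(key)
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
```

With a capacity of 2, storing `a` and `b`, reading `a`, and then storing `c` evicts `b`, because `a` was used more recently.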

6. Use Content Delivery Networks (CDNs)

For static assets like images, videos, and scripts, use CDNs to cache and distribute content closer to your users. This reduces load on your origin server and improves global content delivery speed.
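
CDNs and browsers generally decide how long to keep a response from its `Cache-Control` header. The sketch below picks a header value per path; the function name, the suffix list, and the one-year lifetime are assumptions, and the long lifetime is only safe if asset filenames change whenever their content changes.

```python
# File suffixes treated as static assets (an illustrative, incomplete list).
STATIC_SUFFIXES = (".css", ".js", ".png", ".jpg", ".svg", ".woff2")


def cache_control_for(path: str) -> str:
    """Return a Cache-Control header value for the given request path."""
    if path.endswith(STATIC_SUFFIXES):
        # Cacheable by browsers and CDNs for one year (31536000 seconds).
        return "public, max-age=31536000, immutable"
    # Everything else may be stored but must be revalidated before reuse.
    return "no-cache"
```

For example, `cache_control_for("/static/app.3f9a1c.js")` yields the long-lived policy, while `cache_control_for("/index.html")` yields `no-cache`.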

5. Tools and Technologies for Caching

Here are some popular tools for implementing caching:

- Redis: An in-memory key-value store that supports a variety of data structures. It is commonly used for caching database query results and managing sessions.

- Memcached: A high-performance in-memory caching system used for speeding up dynamic web applications by storing data in memory.

- Varnish: A caching reverse proxy used to accelerate HTTP responses.

- CDNs: Services like Cloudflare, Akamai, or AWS CloudFront provide caching for static assets globally.

Conclusion

Implementing the right caching strategy is crucial for enhancing the performance and scalability of software applications. Whether you use client-side, server-side, or reverse proxy caching, careful consideration of caching mechanisms and best practices helps keep your applications responsive and efficient. By incorporating tools like Redis, Memcached, and CDNs, and using strategies like cache-aside and write-through caching, you can significantly reduce the time it takes to serve data to your users.
